The Logic and Bias That Froze Menopausal Hormone Therapy

How the Women’s Health Initiative (WHI) Misled a Generation

By now, you’ve probably heard about the groundbreaking 2002 study that claimed hormone therapy for women was dangerous. In reality, the Women’s Health Initiative (WHI) became one of the greatest missteps in modern medical history. Its conclusions were overstated, misunderstood, and applied far beyond the data, causing harm to tens of millions of women (Dr. Attia, 60 minutes).

Despite progress, women’s health research remains underfunded, understudied, and often poorly designed. The gaps in research are historical, structural, and continue to this day.

The fallout has been immense, impossible to imagine, actually. Within months, hormone prescriptions plummeted. Millions of women entered menopause or continued aging without access to safe, effective treatments that could have eased suffering, prevented bone loss, reduced cardiovascular disease, and even prolonged life. The damage was not only personal but generational as families lost precious years of vitality, comfort, and connection with mothers, wives, sisters, and grandmothers who might have lived longer, healthier lives.

It’s difficult to overstate the scale of this mistake. The 2002 WHI didn’t just change a medical guideline; it reshaped how an entire society treated women’s health. The neglect that followed left a trail of unnecessary pain, disability, and early death. It deserves not only correction but acknowledgment, a collective pause, perhaps even a day or week of remembrance, for the women who endured needless suffering because science, culture, and bias collided in one historic failure.

This is actually a very common and growing problem that needs urgent and broad attention. We can and need to do better as a society when it comes to applying scientific processes to the study of human health. Unfortunately, the current President is doing much more harm to women and Americans. We are heading backwards when we have the knowledge and experience to be moving so much further ahead.

In this article, I explain what went wrong. How is it that physicians, who mean well, could make such a colossal error with such horrible consequences?

I have extensive experience in scientific publication as both a published author and an investigator in scientific studies in psychiatry and social work. My background as a social worker includes formal training in research design and statistical analysis. As a psychotherapist, my expertise centers on cognitive distortions and other aspects of cognition, particularly their relationship to emotion, motivation, and behavior.

My interest is in understanding the possible thought processes that led to this disaster, which can help us identify tactics to prevent it from happening again.

The Study that Stopped the Treatment

In July 2002, JAMA published the first results of the Women’s Health Initiative (WHI) estrogen-plus-progestin trial. Within weeks, prescriptions for hormone therapy (HT) plummeted by more than 70 %. Physicians stopped refilling, pharmacies saw cancellations, and women in the first decade of menopause, many in distress with vasomotor symptoms, insomnia, and anxiety, were told the therapy was unsafe.

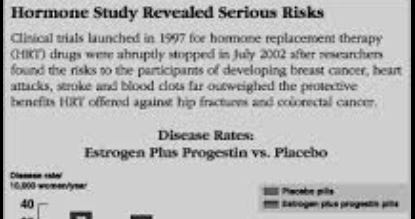

The published numbers appeared unassailable. The WHI enrolled 16,608 postmenopausal women aged 50–79 and compared a fixed daily regimen of conjugated equine estrogen (0.625 mg) plus medroxyprogesterone acetate (2.5 mg) against placebo. After roughly five years, investigators halted the trial early: breast-cancer hazard ratio 1.26, coronary-heart-disease hazard ratio 1.29, stroke 1.41, pulmonary embolism 2.13. The message, that hormones cause heart attacks and cancer, traveled fast through corporate news networks like CNN, Fox, ABC, and others.

But buried in the statistical tables was another story. Those hazard ratios* translated to 8 extra breast cancers and 7 extra coronary events per 10,000 women per year. In absolute terms, the breast-cancer risk rose from about 0.3% to 0.38% annually. Over five years, that equaled one additional case per roughly 250 women. The difference was statistically detectable but clinically small. The math was technically correct, but the inference, or generalization, was not!
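The arithmetic above can be checked with a short sketch. It uses the rounded per-10,000 rates quoted in this article (30 and 38 breast-cancer cases per 10,000 women per year), not the exact trial data, and it assumes extra cases simply add up year by year. The function name is only for illustration.

```python
def risk_summary(control_per_10k, treated_per_10k, years):
    """Convert annual rates per 10,000 women into relative and absolute terms."""
    control = control_per_10k / 10_000
    treated = treated_per_10k / 10_000
    relative_increase = treated / control - 1
    absolute_increase = treated - control
    # Simplifying assumption: extra cases accumulate linearly over the period.
    cumulative_extra = absolute_increase * years
    women_per_extra_case = 1 / cumulative_extra
    return relative_increase, absolute_increase, women_per_extra_case


# Rounded rates from the text: 30 vs. 38 cases per 10,000 women per year.
rel, abs_diff, per_case = risk_summary(30, 38, years=5)
print(f"Relative increase: {rel:.0%}")
print(f"Absolute increase per year: {abs_diff:.2%}")
print(f"Women treated for 5 years per extra case: {per_case:.0f}")
```

With these rounded inputs, the relative increase comes out a little above the reported hazard ratio of 1.26, while the absolute increase stays under a tenth of a percentage point per year. The same data produce a scary number or a small one depending on which of the two is reported.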

The Math and the Miscommunication

The first logical error was base-rate neglect. I write about this here:

Base rate neglect is sometimes referred to as Bernoulli’s fallacy. Relative risk ratios, 26% higher here, 29% there, sound alarming because they are proportional, not absolute. In a low-baseline context, a 26% relative increase can mean less than a tenth of one percent in absolute change. If 30 of 10,000 women on placebo developed breast cancer and 38 of 10,000 on hormones did, the relative increase is roughly 26 to 27%, yet only eight additional cases exist. Unfortunately, Americans heard the wrong number, relative risk, and it sounded very scary! Also unfortunately, many doctors didn’t understand the study either and took it at face value, telling their patients that the risk of HRT was far higher than it really was.

That distortion deepened through Bernoulli’s fallacy: treating proportional changes as inherently meaningful without reference to the underlying real probability. The WHI authors reported ratios because they are standard in epidemiology, but NIH press materials and headlines presented those ratios as personal risk. The public heard “a 25%+ higher chance of cancer,” not “eight-hundredths of a percentage point per year.”

The numbers also hid trade-offs. The same women had fewer hip fractures and fewer colorectal cancers. Yet risk communication collapsed into a single negative narrative: hormones equal cancer risk.

Sampling Bias by Design

The next study flaw was structural. The WHI cohort was not representative of typical HRT users. The average age was 63, often more than ten years past menopause. Many participants were overweight, hypertensive, or already showing vascular disease, the very conditions that magnify cardiovascular risk. The trial was designed as a chronic-disease-prevention study, not as a symptom-management study for newly menopausal women. The Women’s Health Initiative wasn’t built to test whether hormone therapy helps women going through menopause manage hot flashes, sleep problems, or other common symptoms. Instead, it was built to ask a different question: Can hormones prevent heart attacks, strokes, and cancer in older women over many years? In epidemiologic terms, this was selection bias. In sociologic terms, it was a design blind spot: the question was asked of the wrong population. Younger women, for whom hormones are typically prescribed, were underpowered subgroups.

When researchers looked at all the women in the study as one big group, the average results showed more heart attacks, strokes, and breast cancers among those taking hormones. That overall average, called the aggregate result, was what got all the attention. But that single number mixed together very different kinds of women:

Some were in their 50s and just entering menopause.

Some were in their 70s and hadn’t had a period in twenty years.

Some were healthy.

Some already had clogged arteries or high blood pressure.

The younger, healthier women, the ones most like typical hormone-therapy users, were a smaller part of the total. When you average everyone together, their safer outcomes get diluted by the higher risks seen in older participants. Statistically, that means the good results in the smaller group were “drowned out” by the larger, riskier group. It’s like mixing a few cups of clean water with a bucket of muddy water: the whole bucket still looks dirty, even though part of it was clear.
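The dilution effect can be illustrated with a simple weighted average. The subgroup shares and effect sizes below are made-up numbers chosen only to show the mechanism; they are not WHI results, and averaging effects by group size is a deliberate simplification of how a pooled trial estimate is actually computed.

```python
# Hypothetical subgroups: (label, share of participants, effect relative to placebo).
# These values are illustrative assumptions, not figures from the WHI.
subgroups = [
    ("50-59, recently menopausal", 0.30, 0.90),
    ("60-69", 0.45, 1.20),
    ("70-79", 0.25, 1.60),
]


def pooled_estimate(groups):
    """Size-weighted average of subgroup effects (a deliberate simplification)."""
    total_share = sum(share for _, share, _ in groups)
    return sum(share * effect for _, share, effect in groups) / total_share


pooled = pooled_estimate(subgroups)
for label, share, effect in subgroups:
    print(f"{label}: share={share:.0%}, effect={effect:.2f}")
print(f"Pooled estimate: {pooled:.2f}")
```

In this toy example the youngest group has an effect below 1.0 (fewer events than placebo), yet the pooled estimate lands above 1.0 because the two older groups carry most of the weight. A reader who sees only the pooled number has no way to tell that one subgroup pointed the other way.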

Because the main WHI analysis focused only on the overall average, it masked the fact that timing matters: starting hormones early after menopause can be safe, while starting decades later may not be.

Post-Hoc Subgroups and the Timing Hypothesis

Only after publication did investigators, including Jacques E. Rossouw and co-authors Garnet L. Anderson and Robert L. Prentice, revisit the data and stratify by age and years since menopause.

They discovered that women aged 50–59 or within ten years of menopause had neutral or even favorable cardiovascular outcomes, the so-called timing hypothesis. And this was consistent with the Grodstein study.

But these were post-hoc findings, meaning results discovered after the main study was completed. They were not part of the original study plan, and the trial was neither designed nor large enough to test smaller groups with confidence (that is, to run inferential statistical analyses on them, which means the subgroup results cannot be generalized to people outside the study). Analyses like that are called post-hoc subgroup analyses. Post-hoc analysis is useful for exploration, that is, to generate new hypotheses for future research, but it should not be treated as proof of cause and effect!

When researchers go back into a large dataset and start slicing it into dozens of smaller subgroups, by age, weight, years since menopause, or any other factor, they increase the odds of finding patterns that happen purely by chance. This problem is called multiple-comparison bias or data-dredging. The more comparisons you make, the more likely you’ll find something that looks statistically significant but is actually a fluke. In simple terms, it’s like flipping a coin a hundred times and then noticing that one stretch of ten flips landed heads seven times: you might think you’ve found a pattern, but it’s just random chance.
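How quickly the odds of a chance finding grow can be sketched in a few lines. This example assumes every subgroup test is independent and uses the conventional 0.05 significance threshold; real subgroup analyses overlap and are correlated, so treat the numbers as a rough guide rather than a description of the WHI reanalysis. The function names are illustrative.

```python
import random


def familywise_error(num_tests, alpha=0.05):
    """Chance of at least one false positive across independent tests."""
    return 1 - (1 - alpha) ** num_tests


def simulate(num_tests, alpha=0.05, trials=20_000, seed=0):
    """Monte Carlo check: with no real effect, each test 'hits' with probability alpha."""
    rng = random.Random(seed)
    hits = sum(
        any(rng.random() < alpha for _ in range(num_tests))
        for _ in range(trials)
    )
    return hits / trials


for k in (1, 5, 20):
    print(f"{k:>2} subgroup tests: formula={familywise_error(k):.2f}, "
          f"simulated={simulate(k):.2f}")
```

Under these assumptions, a single test carries a 5% chance of a false alarm, but twenty tests carry a better-than-even chance of at least one. That is why subgroup findings that were not planned in advance are treated as hypotheses to test, not conclusions.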

So while those post-hoc analyses about “timing” later turned out to be credible, they could not on their own be used to prove anything. Real confirmation required new, independent studies, such as KEEPS and ELITE, which were specifically designed to test that idea from the start. KEEPS and ELITE were follow-up randomized controlled trials designed to test what the Women’s Health Initiative (WHI) could not: whether the timing of starting hormone therapy affects cardiovascular and other health outcomes. They were smaller, tightly controlled, and enrolled younger women closer to menopause, the group mostly missing from the WHI.

Despite that, the WHI leadership used the reanalysis both ways: as explanation (“older women differ”) and as new recommendations (“younger women may be safer”). That dual use blurred the line between hypothesis generation and validation, which is a good example of analytic scope creep (yes, that’s a real phrase that’s been around a long time). In other words, it’s just not a valid use of the data.

Logical Fallacies Layered on Methodology

Many physicians lack formal training in logical fallacies, and research processes do not always address these issues during the study design process. Peer reviewers may not readily see the errors either.

The WHI’s interpretation of their data demonstrates quite a few logical fallacies or errors in reasoning:

Hasty generalization: one drug combination, one delivery route, one population generalized to all forms of hormone therapy. One drug combination was not representative of all the options or possible interventions over time.

Ecological fallacy: population-level trends applied to individual clinical decisions. This is why BMI does not predict an individual’s risk, just a group’s.

Framing bias: defining “failure to prevent” as “proof of harm.”

Confirmation bias: results read through a prior expectation that earlier observational benefits were spurious.

Causal overreach: association treated as universal causation, ignoring pharmacologic and physiologic heterogeneity.

Each bias compounded the others, turning a statistically limited observation into a sweeping rule.

The Epidemiologic Orthodoxy

Rossouw’s intellectual posture did not originate with WHI. Before 2002, he was known inside the NHLBI for his role in lipid and diet trials such as the Lipid Research Clinics Primary Prevention Trial. His orientation was classic preventive cardiology, population first, safety first, skepticism toward any therapy lacking randomized evidence.

The researchers running the Women’s Health Initiative believed that the only kind of study you could really trust was a large, randomized clinical trial, where people are randomly assigned to take a treatment or not. They thought that other kinds of research, like long-term observational studies that simply follow people and record what happens, weren’t reliable enough to prove anything.

So when earlier studies showed that women taking estrogen seemed to have fewer heart problems, these scientists assumed it wasn’t the hormones helping; it was probably that those women were healthier to begin with (“healthy-user bias”).

Because they already doubted those earlier results, they designed the WHI in a way that would challenge or disprove them. In other words, the study was built with a mindset of skepticism first, not open-ended curiosity, and that belief shaped how the whole project was done and later interpreted.

That cautious way of thinking helped prevent scientists from getting too optimistic about treatments that might not really work, but it also made them too rigid. Once the big study (the WHI) showed that hormones looked harmful overall, the researchers assumed that result was the final word.

Because the study was large and randomized, researchers treated it as more trustworthy than any other kind of evidence. So, instead of asking important follow-up questions, like “Which women might still benefit?” or “Does it matter what kind of hormone or how it’s taken?”, they dismissed everything else as less credible. This mindset shut down nuance and left doctors and patients with a one-size-fits-all warning that didn’t match the real, more complicated truth.

This article from Yale Medicine explains why you can’t use BMI to predict the health outcomes of an individual. This is similar to the problems of the WHI study.

Culture, Hierarchy, and Gender

Rossouw’s individual stance played out on an institutional stage. NIH research in the 1990s remained dominated by senior male researchers and a biomedical hierarchy that viewed women’s-health interventions through a cardiovascular lens rather than a quality-of-life lens. From that framework, menopause was not a physiological transition to be managed but a risk vector to be controlled.

Public-health paternalism reinforced the frame that it was better to withhold therapy than risk harm, even when the therapy’s main purpose was symptom relief. The WHI’s funding rationale, testing HT as disease prevention, embodied that idea. Relief of hot flashes or insomnia did not count as a legitimate outcome in the cost-benefit equation.

This cultural backdrop shaped the interpretation of the study results. A predominantly male leadership, including Rossouw, Robert Prentice, and other senior statisticians, held epistemic authority over data generated from female participants. When the trial was stopped, the message was broadcast from that same hierarchy: that “hormones are dangerous.” Few women clinicians were visible in the first press conferences or early policy briefings.

The point is not personal accusation but structural observation (the stage the researchers are acting on, so to speak). The research stage itself, its hierarchies, funding incentives, and epistemic priorities, predisposed the performance. Even if other research assistants noticed problems with study design or logic, would they have felt comfortable expressing their concerns; if they did express their concerns, would they have been taken seriously?

Confirmation Bias Masquerading as Scientific Rigor

When the WHI contradicted two decades of cardioprotective findings from the Nurses’ Health Study and similar cohorts (yes, you read that correctly, citation at end of this article), the investigators’ response was not curiosity but closure. The logic inside the NIH’s preventive-medicine culture was simple: randomized evidence equals truth, and observational evidence equals error. Once the WHI’s aggregate data showed harm, that worldview was confirmed, ironically, through confirmation bias itself.

Rather than exploring why results were contradictory to major, prior studies, many analysts assumed the earlier data were fatally confounded by “healthy-user bias.” Yet the demographic contrast between the studies was more than obvious: the WHI’s mean age was 63, while most women in the observational data began therapy around 50.

In effect, the WHI tested a different question: what happens when older women with subclinical vascular disease start hormones years after menopause, and then generalized the answer backward to healthy 50-year-olds.

That kind of thinking wasn’t true scientific curiosity; it was all-or-nothing thinking. Instead of asking “Where does this new result fit in?” researchers assumed the big WHI study had completely disproved everything that came before it. In reality, the WHI should have helped define the limits of earlier findings, not erased them. But science at the time operated like a pecking order: big randomized trials were seen as the ultimate truth, and every other type of evidence was automatically treated as inferior.

So, when the WHI results didn’t match earlier studies, the assumption was that the earlier research must have been wrong, rather than asking whether both could be right in different contexts. This was confidence masquerading as scientific caution: a belief that one kind of rigor cancels all others. The real error was not discovering that observational data had limits; it was refusing to ask what those limits revealed. That blind spot allowed methodological pride to stand in for scientific humility.

Institutional Confirmation and Media Amplification

Once the conclusion aligned with institutional caution, confirmation bias extended beyond science to bureaucracy and journalism. NIH press releases highlighted the relative-risk percentages without denominators. News outlets repeated them verbatim. Editors favored alarming headlines because “harm” stories draw clicks and citations.

This was negativity bias layered onto availability bias: vivid threats crowd out proportional reasoning. Within months, major medical societies issued blanket warnings. Primary-care physicians, lacking time to parse hazard ratios, adopted a rule of avoidance.

The paradox: by protecting against hypothetical cardiovascular harm, the system created real suffering from undertreated menopausal symptoms, bone loss, and sleep disturbance.

The Aftermath in Numbers

Prescription decline: from ~90 million HRT prescriptions in 2001 to <30 million by 2003, a drop of more than 60 million prescriptions.

Symptom burden: surveys showed over half of abruptly discontinued users reported return of moderate-to-severe hot flashes.

Fracture outcomes: subsequent epidemiologic studies suggested an uptick in hip-fracture incidence after mass discontinuation.

When researchers went back and reanalyzed the WHI data years later, they found that women who took estrogen didn’t die any more often than those who didn’t. In fact, among women in their 50s, the data suggested they might have been less likely to die from any cause: their relative risk of death was about 30% lower than that of women who took no hormones (in absolute terms, 13 fewer deaths per 10,000).

Later randomized trials, KEEPS (2012) and ELITE (2016), specifically tested younger women and confirmed the timing hypothesis: early initiation within ten years of menopause yields neutral or favorable vascular outcomes.

In other words, when the proper population and formulation were studied, the supposed universal risk vanished.

Lessons in Scientific Humility

The WHI remains one of the most expensive clinical trials in history, at over $600 million, and an instructive case study in how valid data can be framed into invalid inference. The lesson is not to distrust large trials but to recognize the structure of bias around them: design assumptions, institutional incentives, cultural narratives, communicative shortcuts, and other problems or challenges.

Science aspires to objectivity, yet every trial is staged within human systems. The WHI’s rigor could not compensate for its context: an epidemiologic culture that valued population protection over personal relief, that equated uncertainty with danger, and that assumed male-coded detachment to be neutrality.

Toward Better Frames

Correcting the record requires more than re-running numbers. It requires changing the frame.

Specify subgroups ahead of time (prospectively). When planning a study, build it around how the body actually works, not around what’s easiest or cheapest to test.

Report absolute and relative risk together. Numbers without denominators invite distortion and sensational headlines!

Include patient-centered outcomes. Quality of life is not just an anecdote, it is real data.

Diversify leadership. When those who design and interpret research reflect those studied, framing errors diminish. For example, groups being researched need active representation throughout the research process.

Modern guidelines from NAMS and ACOG now state clearly: for healthy women under 60 or within 10 years of menopause, hormone therapy remains the most effective treatment for vasomotor symptoms and carries low absolute risk when individualized. That nuanced message could have been the WHI’s conclusion in 2002, if the stage the researchers stood on had allowed for it.

The Women’s Health Initiative changed interventions for tens of millions of Americans, not because of data but because of context. A study designed to test prevention in older women became, through logical fallacy and institutional bias, a referendum on all hormone therapy.

Rossouw and his colleagues were products of a system that equated restraint with virtue. Their caution was overzealous. They saw certainty through randomization and ended up with a grand mistake, where HT was misdiagnosed as harmful and the study itself misdiagnosed as helpful.

The result was a decade of fear, suffering, and, ultimately, loss of trust. The lesson is timeless: evidence never speaks for itself, it speaks through its interpreters and through the stage on which it stands. Scientific data are filtered through human judgment, institutional culture, and social context.

For that reason, every scientist must remain acutely aware of the many forces that shape interpretation: personal bias, disciplinary tradition, funding incentives, media pressure, and cultural framing. True scientific rigor requires not only careful measurement but self-examination, a constant vigilance against the subtle ways that context can bend perception and distort truth.

__________

Statistics explained:

*A hazard ratio tells you how much more (or less) likely something is to happen in one group compared to another, but over time.

Here is an example:

If two groups of people are being studied and compared, one takes a new medicine and one doesn’t, and the hazard ratio is 2, that means the event (like a heart attack) happens twice as often in the medicine group as in the other group. If the hazard ratio is 0.5, it means it happens half as often. It’s like comparing how fast two groups reach a goal or a problem, like getting cancer or heart disease or even dying.

Relative risk compares how likely something is to happen in one group versus another.

Absolute risk tells you the actual chance of it happening at all because it includes what is called the “base rate.” The base rate is like asking how often people actually get struck by lightning, rather than asking how often golfers get struck compared to people going for a walk in their neighborhood. If the base rate is 1 in a million, but golfers get struck 4x as often as walkers, then it could sound like golfing is far more dangerous than walking, even though a strike is rare to begin with in either situation.

Example: In one group, 2 out of 100 people get sick. In another group, 1 out of 100 people get sick.

Relative risk: 2 ÷ 1 = 2, so the first group has twice the risk of the second.

Absolute risk: 2% vs. 1%, so the difference is only 1 percentage point.

So: Relative risk sounds big (“twice as likely”). Absolute risk shows the real numbers (“a 1-percentage-point higher chance”).

Scientists use both to show the difference between how big a change sounds and how big it really is.

_____

Citations:

Grodstein F, Stampfer MJ, Manson JE, et al. Postmenopausal estrogen and progestin use and the risk of cardiovascular disease. New England Journal of Medicine. 1996;335(7):453–461. DOI: 10.1056/NEJM199608153350701

JAMA, 2002 WHI main report. https://jamanetwork.com/journals/jama/fullarticle/195120

Annals of Internal Medicine, 2012 (KEEPS). https://pubmed.ncbi.nlm.nih.gov/31453973/

NEJM, 2016 (ELITE). https://www.nejm.org/doi/full/10.1056/NEJMoa1505241

NAMS and ACOG current position statements. https://www.acog.org/womens-health/faqs/hormone-therapy-for-menopause